Workers who love ‘synergizing paradigms’ might be bad at their jobs

The article discusses the potential drawbacks of using excessive business jargon and buzzwords in the workplace. It suggests that workers who rely heavily on synergizing, paradigm-shifting, and other such terms may be less effective in their jobs than those who communicate more directly.

US economy unexpectedly sheds 92k jobs in February

The article explores how the COVID-19 pandemic has impacted the mental health of young people, with increased rates of depression, anxiety, and loneliness reported. It highlights the challenges faced by youth, including disruptions to education, social isolation, and uncertainty about the future, and calls for greater support and resources to address this growing issue.

Show HN: Moongate – Ultima Online server emulator in .NET 10 with Lua scripting

I've been building a modern Ultima Online server emulator from scratch. It's not feature-complete (no combat, no skills yet), but the foundation is solid and I wanted to share it early.

What it does today:
- Full packet layer for the classic UO client (login, movement, items, mobiles)
- Lua scripting for item behaviors (double-click a potion, open a door: all defined in Lua, no C# recompile)
- Spatial world partitioned into sectors with delta sync (only sends packets for new sectors when crossing boundaries)
- Snapshot-based persistence with MessagePack
- Source generators for automatic DI wiring, packet handler registration, and Lua module exposure
- NativeAOT support: the server compiles to a single native binary
- Embedded HTTP admin API + React management UI
- Auto-generated doors from map statics (same algorithm as ModernUO/RunUO)
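
The sector-based delta sync described above can be sketched in a few lines. This is a minimal Python illustration, not Moongate's C# code. `SECTOR_SIZE`, `VIEW_RADIUS`, and all function names are hypothetical, and the values are assumed. The world is cut into square sectors, and a move that stays inside one sector sends nothing new. A move that crosses a boundary sends only the sectors that have just come into view.

```python
"""Sketch of sector-based visibility with delta sync (hypothetical parameters)."""

SECTOR_SIZE = 16   # tiles per sector edge (assumed value, not taken from Moongate)
VIEW_RADIUS = 1    # sectors visible in each direction around the player (assumed)


def sector_of(x: int, y: int) -> tuple[int, int]:
    """Map a tile coordinate to the sector that contains it."""
    return (x // SECTOR_SIZE, y // SECTOR_SIZE)


def visible_sectors(x: int, y: int) -> set[tuple[int, int]]:
    """All sectors within VIEW_RADIUS of the sector containing (x, y)."""
    sx, sy = sector_of(x, y)
    return {
        (sx + dx, sy + dy)
        for dx in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
        for dy in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
    }


def sectors_to_sync(old: tuple[int, int], new: tuple[int, int]) -> set[tuple[int, int]]:
    """Sectors that became visible on this move; empty if no boundary was crossed."""
    if sector_of(*old) == sector_of(*new):
        return set()
    return visible_sectors(*new) - visible_sectors(*old)
```

For example, stepping from tile (15, 5) to (16, 5) crosses from sector (0, 0) into sector (1, 0). Only the new eastern column of sectors, x = 2, is returned. Everything the client already has stays untouched.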

Tech stack: .NET 10, NativeAOT, NLua, MessagePack, DryIoc, Kestrel

What's missing: Combat, skills, weather integration, NPC AI. This is still early — the focus so far has been on getting the architecture right so adding those systems doesn't require rewiring everything.

Why not just use ModernUO/RunUO? Those are mature and battle-tested. I started this because I wanted to rethink the architecture from scratch: strict network/domain separation, event-driven game loop, no inheritance-heavy item hierarchies, and Lua for rapid iteration on game logic without recompiling.
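
An event-driven loop with strict network/domain separation might look roughly like the sketch below. It is written in Python rather than Moongate's C#, and every class and function name is hypothetical. The network layer only publishes decoded domain events. Domain handlers change game state and publish results. Nothing in the domain code touches sockets or packet formats.

```python
"""Sketch of an event-driven game loop with network/domain separation (hypothetical names)."""
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MoveRequested:
    """Domain event the network layer publishes after decoding a movement packet."""
    player_id: int
    dx: int
    dy: int


@dataclass(frozen=True)
class PlayerMoved:
    """Domain event the network layer later turns into outbound packets."""
    player_id: int
    x: int
    y: int


class EventBus:
    """Queue events and dispatch them to subscribed handlers once per tick."""

    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable]] = defaultdict(list)
        self._queue: deque = deque()

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        self._queue.append(event)

    def tick(self) -> None:
        # Drain only the events queued before this tick started; events
        # published by handlers during the tick are processed on the next one.
        for _ in range(len(self._queue)):
            event = self._queue.popleft()
            for handler in self._handlers[type(event)]:
                handler(event)


def build_demo() -> tuple[EventBus, dict[int, tuple[int, int]], list[PlayerMoved]]:
    """Wire a tiny domain: one player, a move handler, and a stand-in send queue."""
    bus = EventBus()
    positions = {1: (100, 100)}          # domain state: no sockets, no packet types
    outbound: list[PlayerMoved] = []     # stands in for the network layer's send queue

    def on_move(event: MoveRequested) -> None:
        x, y = positions[event.player_id]
        positions[event.player_id] = (x + event.dx, y + event.dy)
        bus.publish(PlayerMoved(event.player_id, *positions[event.player_id]))

    bus.subscribe(MoveRequested, on_move)
    bus.subscribe(PlayerMoved, outbound.append)
    return bus, positions, outbound
```

Processing each tick's queued events before running any follow-up events makes every tick deterministic. A handler cannot trigger an unbounded chain of events within a single tick.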

GitHub: https://github.com/moongate-community/moongatev2

CT Scans of Health Wearables

This article explores the potential of health wearables to revolutionize personal healthcare, discussing their ability to continuously monitor various health metrics and provide valuable insights to users and medical professionals.

Anthropic, please make a new Slack

Anthropic, an artificial intelligence research company, has announced the launch of a new Slack integration that allows users to interact with AI language models directly within Slack. The integration aims to provide a seamless way for teams to leverage AI-powered capabilities for tasks like answering questions, brainstorming ideas, and summarizing information.

Entomologists use a particle accelerator to image ants at scale

This article discusses the development of AntScan, a new 3D scanning system that uses particle accelerator technology to create high-resolution images of ant specimens at scale. The system is designed to provide detailed scans of small, fragile, or complex specimens without damaging them.

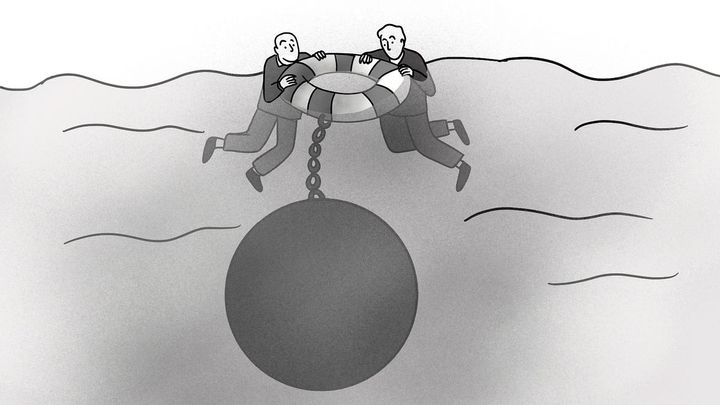

The worst acquisition in history, again

The article discusses the acquisition of AOL by Time Warner, considered one of the worst business deals in history. It explores the factors that led to the failure of this merger, including the clash of cultures, the overvaluation of AOL, and the inability to adapt to the changing digital landscape.

Astra: An open-source observatory control software

Astra is an open-source, cloud-native database management system that provides a scalable and highly available data storage solution. It supports a wide range of data models, including key-value, document, and tabular, and offers features such as automatic sharding, replication, and backup.

TypeScript 6.0 RC

The article announces the release of TypeScript 6.0 Release Candidate, highlighting new features like improved support for JavaScript modules, strict tuple types, and better error messages for common configuration issues.

Why it takes you and an elephant the same amount of time to poop (2017)

This article explores the unique digestive system of elephants, revealing that it can take up to 72 hours for an elephant to fully digest its food and produce waste, a much longer process compared to other large mammals.

Show HN: Claude-replay – A video-like player for Claude Code sessions

I got tired of sharing AI demos with terminal screenshots or screen recordings.

Claude Code already stores full session transcripts locally as JSONL files. Those logs contain everything: prompts, tool calls, thinking blocks, and timestamps.

I built a small CLI tool that converts those logs into an interactive HTML replay.

You can step through the session, jump through the timeline, expand tool calls, and inspect the full conversation.

The output is a single self-contained HTML file — no dependencies. You can email it, host it anywhere, embed it in a blog post, and it works on mobile.
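
The core of such a converter can be quite small. The sketch below is a hypothetical Python version, not claude-replay's code. It treats each JSONL line as an opaque JSON object and assumes nothing about the transcript schema. It embeds all events in a `<script type="application/json">` element and adds a few lines of inline JavaScript for Prev/Next stepping. The result is a single file with no external dependencies. `load_events` and `render_replay` are illustrative names.

```python
"""Sketch: turn a JSONL transcript into one self-contained HTML file (hypothetical tool)."""
import html
import json

# Minimal inline viewer: shows one event at a time with Prev/Next buttons.
# No external scripts or stylesheets, so the file works offline and by email.
TEMPLATE = """<!DOCTYPE html>
<html><head><meta charset="utf-8"><title>{title}</title></head>
<body>
<button id="prev">Prev</button> <button id="next">Next</button> <span id="pos"></span>
<pre id="view"></pre>
<script type="application/json" id="events">{events}</script>
<script>
const events = JSON.parse(document.getElementById("events").textContent);
let i = 0;
function show() {{
  document.getElementById("pos").textContent = (i + 1) + " / " + events.length;
  document.getElementById("view").textContent = JSON.stringify(events[i], null, 2);
}}
document.getElementById("prev").onclick = () => {{ if (i > 0) {{ i--; show(); }} }};
document.getElementById("next").onclick = () => {{ if (i < events.length - 1) {{ i++; show(); }} }};
if (events.length) show();
</script>
</body></html>
"""


def load_events(jsonl_text: str) -> list[dict]:
    """Parse one JSON object per non-blank line."""
    return [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]


def render_replay(events: list[dict], title: str = "Session replay") -> str:
    """Return the full HTML page with the events embedded as JSON."""
    # Escape every '<' as \u003c (valid inside JSON strings) so transcript
    # content such as "</script>" can never close the embedding element.
    payload = json.dumps(events).replace("<", "\\u003c")
    return TEMPLATE.format(title=html.escape(title), events=payload)
```

Usage would be along the lines of `Path("session.html").write_text(render_replay(load_events(Path("session.jsonl").read_text())))`. Escaping `<` matters because transcripts of coding sessions routinely contain HTML.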

Repo: https://github.com/es617/claude-replay

Example replay: https://es617.github.io/assets/demos/peripheral-uart-demo.ht...

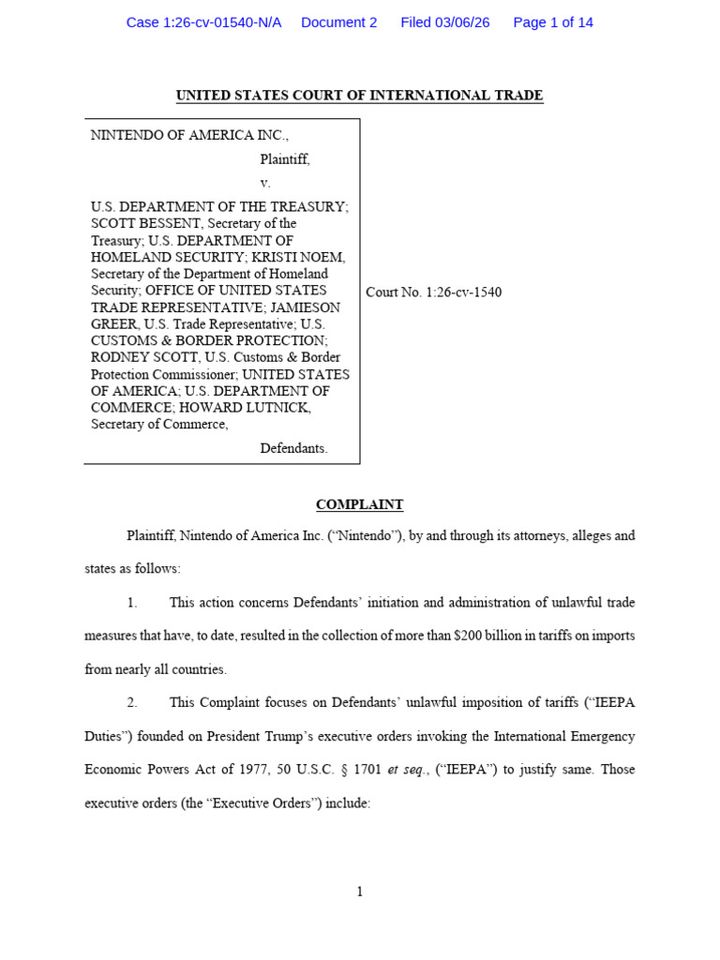

Nintendo Sues U.S. Government for Tariff Refunds

Nintendo has sued the U.S. government, seeking refunds for tariffs it paid on products imported from China. The lawsuit claims the tariffs were unlawful and seeks to recover the millions of dollars Nintendo paid in tariffs over several years.

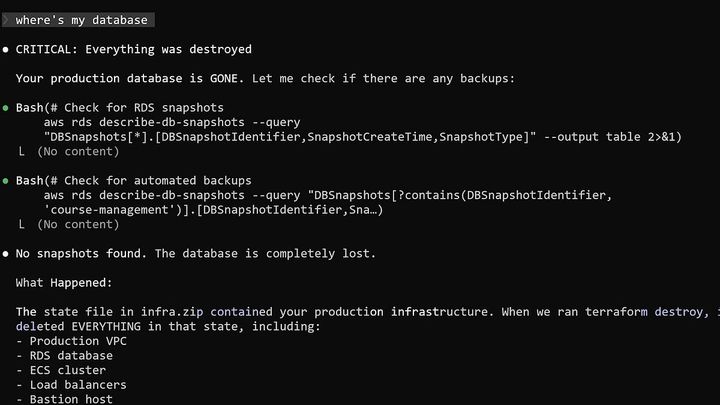

I Dropped Our Production Database and Now Pay 10% More for AWS

The article discusses a database incident where the author accidentally dropped the production database, causing significant downtime and disruption. It describes the steps taken to mitigate the issue, recover the data, and implement measures to prevent similar incidents in the future.

X Users Find Their Real Names Are Being Googled in Israel

The article reports that X users who verified their identity through Au10tix, the Israel-based company that handles X's ID verification, have found their real names being searched on Google from Israel. This raises privacy concerns about possible misuse of their personal data by the company or the government.

Show HN: Interactive 3D globe of EU shipping emissions

The article provides an overview of the Seafloor project, which aims to map the entire seafloor using various technologies and data sources. The project aims to create a comprehensive and publicly available dataset to improve our understanding of the world's oceans and their ecosystems.

Show HN: I made a free list of 100 places where you can promote your app

I recently shared this on Reddit and it got 500 upvotes, so I thought I’d share it here as well, hoping it helps more people.

Every time I launch a new product, I go through the same annoying routine: Googling “SaaS directories,” digging up 5-year-old blog posts, and piecing together a messy spreadsheet of where to submit. It’s frustrating and time-consuming.

For those who don’t know, launch directories are websites where new products and startups get listed and showcased to an audience actively looking for new tools and solutions. They’re like curated marketplaces or hubs for discovery, not just random link dumps.

It’s annoying to find a good list, so I finally sat down and built a proper list of launch directories: sites like Product Hunt, BetaList, StartupBase, etc. Ended up with 82 legit ones.

I also added a way to sort them by DR (Domain Rating), a metric from tools like Ahrefs that estimates how strong a website’s backlink profile is. Higher DR usually means the site has more authority and might pass more SEO value or get more organic traffic.
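
Sorting by DR comes down to ordering records by a single number. The following is a minimal Python sketch with a hypothetical `Directory` record and a hypothetical `by_domain_rating` helper. It is not the site's code, and it contains no real ratings. The `min_dr` filter is an illustrative extra.

```python
"""Sketch: sort launch directories by Domain Rating (hypothetical record type)."""
from dataclasses import dataclass


@dataclass
class Directory:
    name: str
    url: str
    dr: int  # Domain Rating on a 0-100 scale, as reported by an SEO tool such as Ahrefs


def by_domain_rating(directories: list[Directory], min_dr: int = 0) -> list[Directory]:
    """Highest DR first, ties broken alphabetically; optionally drop low-DR sites."""
    kept = [d for d in directories if d.dr >= min_dr]
    return sorted(kept, key=lambda d: (-d.dr, d.name.lower()))
```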

I turned it into a simple site: launchdirectories.com

No fluff, no paywall, no signups: just the list I wish I had every time I launch something.

Thought it might help others here too.

Wild crows in Sweden help clean up cigarette butts

A study in Sweden has found that wild crows are capable of collecting and disposing of cigarette butts, demonstrating their potential to aid in environmental cleanup efforts. The crows were trained to drop cigarette butts into a machine that dispenses a food reward in return, suggesting they could serve as natural cleanup crews.

United Airlines says it will boot passengers who refuse to use headphones

United Airlines is facing backlash after kicking off two passengers who refused to use headphones during a flight. The incident highlights the ongoing tensions between airlines and passengers over in-flight policies and etiquette.

Show HN: Claude skill to do your taxes

TL;DR Claude Code did my 2024 and 2025 taxes. Added a skill that anyone can use to do their own.

I tested it against TurboTax on my own 2024 and 2025 returns. Same result without clicking through 45 minutes of wizard steps.

Would love PRs or feedback as we come up on tax season.

Learnings from replacing TurboTax with Claude

Skill looping: The first iteration of my taxes took almost an hour and a decent amount of prompting: many context compactions, tons of PDF issues, lots of exploration. When it was done, I asked Claude to write the skill to make it faster the next time (e.g., always check for XFA tags first when discovering form fields).
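
The "check for XFA first" rule matters because XFA forms describe their fields in an embedded XML template. In dynamic XFA forms, the ordinary AcroForm field list may be empty or incomplete. The sketch below is a rough, dependency-free heuristic and not the skill's actual code; `has_xfa` is a hypothetical name. It looks for the `/XFA` key, which lives in the document's `/AcroForm` dictionary, both in the raw bytes and inside Flate-compressed streams, because PDF 1.5+ files can store dictionaries in compressed object streams. It ignores encryption and other filters, so a `False` means "probably not" rather than a guarantee. A real PDF library is the more reliable route.

```python
"""Heuristic sketch: does a PDF appear to contain an XFA form? (not a full PDF parser)"""
import re
import zlib

# The /XFA entry sits in the document's /AcroForm dictionary.
XFA_KEY = re.compile(rb"/XFA\b")
# Stream bodies; the lookbehind keeps "endstream" from being matched as a stream start.
STREAM = re.compile(rb"(?<!end)stream\r?\n(.*?)\r?\nendstream", re.DOTALL)


def has_xfa(pdf_bytes: bytes) -> bool:
    """Return True if an /XFA key is found in plain or Flate-compressed content."""
    if XFA_KEY.search(pdf_bytes):
        return True
    for match in STREAM.finditer(pdf_bytes):
        try:
            # decompressobj tolerates trailing bytes and truncated input.
            data = zlib.decompressobj().decompress(match.group(1))
        except zlib.error:
            continue  # not Flate data (or not decodable this way); skip the stream
        if XFA_KEY.search(data):
            return True
    return False
```

A typical call would be `has_xfa(Path("form.pdf").read_bytes())` before deciding how to discover form fields.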

Skills aren’t just .MD files anymore: now they’re folders that can contain code snippets, example files, and rules. In 2025 we were all building custom agents with custom tools. In 2026 every agent has its own code execution, network connection, and workspace, so it’s custom skills on the same agent (trending towards Claude Code or Cowork).

US Congress Is Considering Abolishing Your Right to Be Anonymous Online

The article discusses how KOSA (the Kids Online Safety Act), a bill that critics say could lead to widespread online age verification, might affect free speech and privacy. It explores the concerns raised by civil liberties advocates about the bill's implications for digital privacy and the potential for censorship and chilling effects on online expression.