US economy unexpectedly sheds 92k jobs in February

The article explores how the COVID-19 pandemic has impacted the mental health of young people, with increased rates of depression, anxiety, and loneliness reported. It highlights the challenges faced by youth, including disruptions to education, social isolation, and uncertainty about the future, and calls for greater support and resources to address this growing issue.

Workers who love ‘synergizing paradigms’ might be bad at their jobs

The article discusses the potential drawbacks of using excessive business jargon and buzzwords in the workplace. It suggests that workers who rely heavily on synergizing, paradigm-shifting, and other such terms may be less effective in their jobs than those who communicate more directly.

Hardening Firefox with Anthropic's Red Team

The bugs are the ones that say "using Claude from Anthropic" here: https://www.mozilla.org/en-US/security/advisories/mfsa2026-1...

https://blog.mozilla.org/en/firefox/hardening-firefox-anthro...

https://www.wsj.com/tech/ai/send-us-more-anthropics-claude-s...

Show HN: Moongate – Ultima Online server emulator in .NET 10 with Lua scripting

I've been building a modern Ultima Online server emulator from scratch. It's not feature-complete (no combat, no skills yet), but the foundation is solid and I wanted to share it early.

What it does today:

- Full packet layer for the classic UO client (login, movement, items, mobiles)
- Lua scripting for item behaviors (double-click a potion, open a door — all defined in Lua, no C# recompile)
- Spatial world partitioned into sectors with delta sync (only sends packets for new sectors when crossing boundaries)
- Snapshot-based persistence with MessagePack
- Source generators for automatic DI wiring, packet handler registration, and Lua module exposure
- NativeAOT support — the server compiles to a single native binary
- Embedded HTTP admin API + React management UI
- Auto-generated doors from map statics (same algorithm as ModernUO/RunUO)

Tech stack: .NET 10, NativeAOT, NLua, MessagePack, DryIoc, Kestrel

What's missing: Combat, skills, weather integration, NPC AI. This is still early — the focus so far has been on getting the architecture right so adding those systems doesn't require rewiring everything.

Why not just use ModernUO/RunUO? Those are mature and battle-tested. I started this because I wanted to rethink the architecture from scratch: strict network/domain separation, event-driven game loop, no inheritance-heavy item hierarchies, and Lua for rapid iteration on game logic without recompiling.

GitHub: https://github.com/moongate-community/moongatev2
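The sector-based delta sync in the feature list (only sending packets for sectors that newly come into view when a player crosses a sector boundary) can be sketched as a set difference. This is a minimal Python sketch, not Moongate's implementation: the 16-tile sector size, the one-sector view radius, and all function names are hypothetical.

```python
# Hypothetical parameters: square sectors of SECTOR_SIZE tiles, and a player
# sees every sector within VIEW_RADIUS sectors of the one they stand in.
SECTOR_SIZE = 16
VIEW_RADIUS = 1


def sector_of(x: int, y: int) -> tuple[int, int]:
    """Map a tile coordinate to the sector that contains it."""
    return (x // SECTOR_SIZE, y // SECTOR_SIZE)


def visible_sectors(x: int, y: int) -> set[tuple[int, int]]:
    """All sectors within VIEW_RADIUS of the sector containing (x, y)."""
    sx, sy = sector_of(x, y)
    return {
        (sx + dx, sy + dy)
        for dx in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
        for dy in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
    }


def sectors_to_send(old: tuple[int, int], new: tuple[int, int]) -> set[tuple[int, int]]:
    """Sectors that just came into view; empty if no boundary was crossed."""
    if sector_of(*old) == sector_of(*new):
        return set()
    # Only the delta: sectors visible now that were not visible before.
    return visible_sectors(*new) - visible_sectors(*old)
```

Moving one tile east from (15, 0) to (16, 0) crosses from sector (0, 0) into (1, 0), so only the three sectors in the newly visible eastern column are sent; a move that stays inside one sector sends nothing.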

CT Scans of Health Wearables

This article explores the potential of health wearables to revolutionize personal healthcare, discussing their ability to continuously monitor various health metrics and provide valuable insights to users and medical professionals.

We might all be AI engineers now

The article discusses the growing importance of AI in various industries and how more people are becoming AI engineers, even those without a traditional computer science background. It explores the increasing accessibility and widespread adoption of AI tools and platforms, allowing non-experts to participate in AI-related tasks and projects.

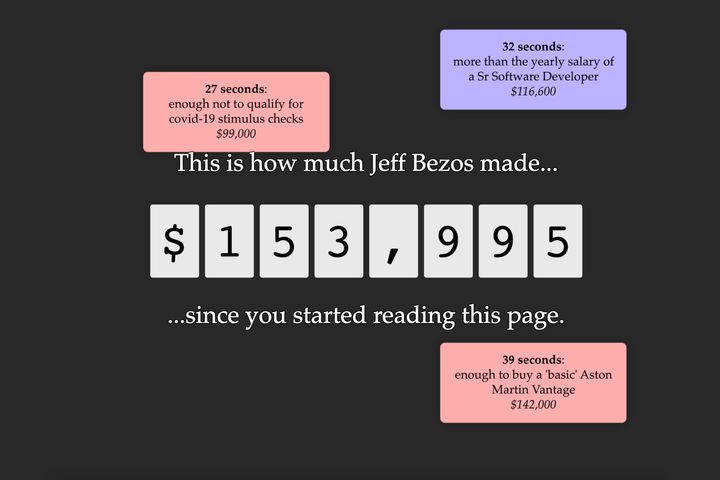

How Much Money Jeff Bezos Made Since You Started Reading This Page

This article provides a calculator to estimate the wealth growth of Amazon founder Jeff Bezos, allowing users to input various parameters and see projections of his future net worth based on Amazon stock performance and other factors.
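One simple way a calculator like this could work is to prorate a yearly wealth change over the seconds a visitor spends on the page, with stock-driven change computed as shares held times the price move. The sketch below assumes exactly that; the constant-rate model and the function names are assumptions, not the page's actual method, and the inputs are hypothetical rather than real figures for Bezos's holdings.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds; ignores leap years


def earned_while_reading(annual_gain_usd: float, elapsed_seconds: float) -> float:
    """Prorate a yearly wealth gain over the time spent on the page.

    Assumes the gain accrues at a constant rate, which stock-driven
    wealth does not do in practice.
    """
    return annual_gain_usd / SECONDS_PER_YEAR * elapsed_seconds


def stock_driven_change(shares: float, old_price: float, new_price: float) -> float:
    """Change in the value of a stock holding when the share price moves."""
    return shares * (new_price - old_price)


if __name__ == "__main__":
    # Hypothetical inputs: $31,536,000 per year works out to $1 per second.
    print(earned_while_reading(31_536_000, 60))  # 60.0
    print(stock_driven_change(1_000, 100.0, 110.0))  # 10000.0
```

A real page would also need live share prices and holdings; the constant-rate proration is only the simplest possible model.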

Astra: An open-source observatory control software

Astra is an open-source, cloud-native database management system that provides a scalable and highly available data storage solution. It supports a wide range of data models, including key-value, document, and tabular, and offers features such as automatic sharding, replication, and backup.

Entomologists use a particle accelerator to image ants at scale

This article discusses the development of a new 3D scanning system called AntScan, which uses particle accelerator technology to create high-resolution images of objects. The system is designed to provide detailed scans of small, fragile, or complex objects without causing damage.

Elite Overproduction

The article discusses the concept of elite overproduction, in which a society produces more aspiring elites, such as highly educated individuals, than there are elite positions to absorb them. It explores the potential social and economic consequences of this phenomenon, such as underemployment and social unrest.

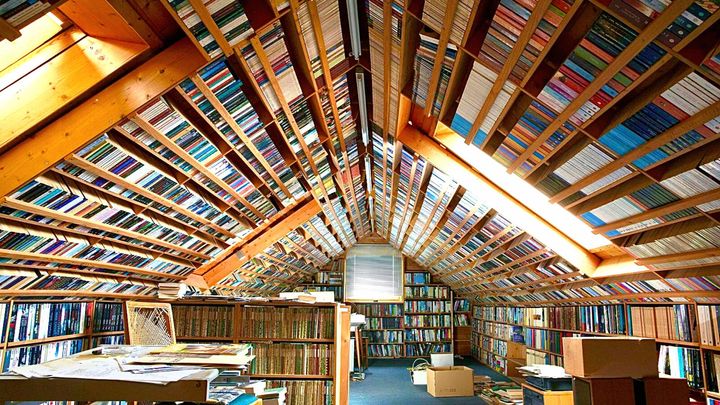

70k Books Found in Hidden Library in This Germany Home (2023)

A hidden library containing over 70,000 books, some dating back to the 16th century, was discovered in a German home. The vast collection, which was kept secret for decades, offers a unique glimpse into the private book holdings of a bibliophile family.

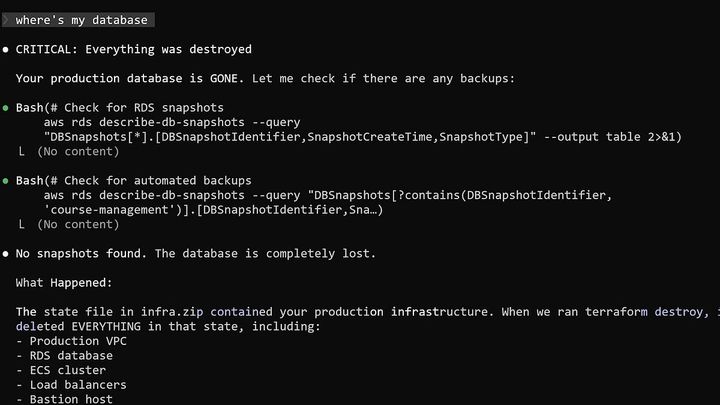

I Dropped Our Production Database and Now Pay 10% More for AWS

The article discusses a database incident where the author accidentally dropped the production database, causing significant downtime and disruption. It describes the steps taken to mitigate the issue, recover the data, and implement measures to prevent similar incidents in the future.

Show HN: Claude-replay – A video-like player for Claude Code sessions

I got tired of sharing AI demos with terminal screenshots or screen recordings.

Claude Code already stores full session transcripts locally as JSONL files. Those logs contain everything: prompts, tool calls, thinking blocks, and timestamps.

I built a small CLI tool that converts those logs into an interactive HTML replay.

You can step through the session, jump through the timeline, expand tool calls, and inspect the full conversation.

The output is a single self-contained HTML file — no dependencies. You can email it, host it anywhere, embed it in a blog post, and it works on mobile.

Repo: https://github.com/es617/claude-replay

Example replay: https://es617.github.io/assets/demos/peripheral-uart-demo.ht...
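The core conversion (JSONL in, one self-contained HTML file out) can be sketched in a few lines of Python. This is a hypothetical illustration, not claude-replay's code: it assumes only that each non-blank line is a JSON object, treats "type" and "timestamp" as assumed optional field names, and renders entries as collapsible `<details>` blocks instead of the tool's timeline player. All CSS is inline, so the output has no external dependencies.

```python
import html
import json


def load_jsonl(text: str) -> list[dict]:
    """Parse one JSON object per non-blank line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def render_entry(i: int, entry: dict) -> str:
    # "type" and "timestamp" are assumed field names; fall back if absent.
    label = f"{i + 1}. {entry.get('type', 'entry')} {entry.get('timestamp', '')}".strip()
    body = json.dumps(entry, indent=2, ensure_ascii=False)
    # Escape everything so prompts or tool output cannot inject markup.
    return (
        f"<details><summary>{html.escape(label)}</summary>"
        f"<pre>{html.escape(body)}</pre></details>"
    )


def build_replay(jsonl_text: str) -> str:
    """Return one self-contained HTML page: inline CSS, no external assets."""
    entries = load_jsonl(jsonl_text)
    items = "\n".join(render_entry(i, e) for i, e in enumerate(entries))
    return (
        "<!DOCTYPE html><html><head><meta charset='utf-8'>"
        "<meta name='viewport' content='width=device-width, initial-scale=1'>"
        "<style>body{font-family:sans-serif;max-width:60em;margin:auto}"
        "pre{white-space:pre-wrap}</style></head><body>\n"
        f"{items}\n</body></html>"
    )
```

Writing the string returned by `build_replay` to a `.html` file gives a page that can be emailed or hosted as-is; the viewport tag and wrapping `<pre>` keep it readable on mobile.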

U.S. Capabilities Are Showing Signs of Rot

This article explores the potential military failures of the Trump administration's approach to Iran, including the risks of escalation, the unintended consequences of confrontational policies, and the need for a more nuanced and strategic approach to foreign policy in the region.

Async Programming Is Just Inject Time

The article discusses the use of async/await and the async/inject and async/effects patterns in web development. It explores how these patterns can help manage asynchronous code and improve the overall structure and maintainability of web applications.

First MacBook Neo Benchmarks Are In

The article discusses the first benchmarks of the rumored 'MacBook Neo' device, which appears to be a new Apple laptop powered by an unannounced in-house processor. The benchmarks suggest the MacBook Neo could offer significant performance improvements over current Apple Silicon-based Macs.

Show HN: Interactive 3D globe of EU shipping emissions

The article provides an overview of the Seafloor project, which aims to map the entire seafloor using various technologies and data sources. The project aims to create a comprehensive and publicly available dataset to improve our understanding of the world's oceans and their ecosystems.

United Airlines says it will boot passengers who refuse to use headphones

United Airlines is facing backlash after kicking off two passengers who refused to use headphones during a flight. The incident highlights the ongoing tensions between airlines and passengers over in-flight policies and etiquette.

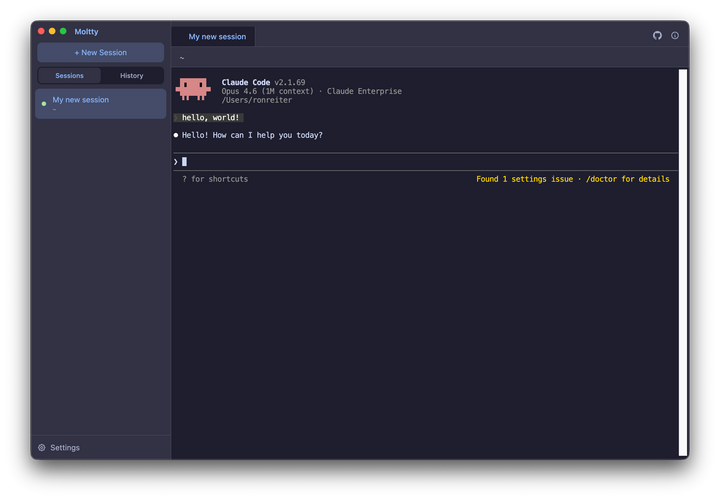

Show HN: Moltty – Organized, Persistent AI Coding Sessions

Moltty is a web-based platform that allows users to create, manage, and share interactive presentations and documents. The platform offers features such as real-time collaboration, multimedia integration, and various presentation templates to enhance the creation and delivery of digital content.

US Congress Is Considering Abolishing Your Right to Be Anonymous Online

The article discusses the potential impact of KOSA (the Kids Online Safety Act), a proposed bill that could lead to online age verification, on free speech and privacy. It explores the concerns raised by civil liberties advocates about the bill's implications for digital privacy and the potential for censorship and chilling effects on online expression.