Capability-Tiered AI Governance Architecture (CEGP)

A new chapter for the Nix language, courtesy of WebAssembly

This article explores the use of built-in functions in WebAssembly, highlighting the advantages they offer over implementing these functions from scratch. It provides insights into how built-in functions can improve performance and reduce the size of WebAssembly modules.

Shipping a Button in 2026 [video]

Show HN: Stream-native AI that never sleeps, an alternative to OpenClaw

PulseBot is an open-source tool that provides real-time monitoring and alerting for GitHub repositories. It tracks issues, pull requests, and other events, allowing users to set custom alerts and notifications to stay informed about their projects.

Show HN: Flompt – Visual prompt builder that decomposes prompts into blocks

Anthropic's research shows prompt structure matters more than model choice. Flompt takes any raw prompt, decomposes it into 12 typed blocks (role, context, objective, constraints, examples, chain-of-thought, output format, etc.), and compiles them into Claude-optimized XML.
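To make the decompose-then-compile idea concrete, here is a minimal sketch of the compile step only. It is not Flompt's code: the `Block` class, the `compile_blocks` function, the `<prompt>` wrapper, and the tag-naming rule are all assumptions made for illustration. Only the block kinds (role, context, objective, and so on) come from the post.

```python
import re
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass
class Block:
    kind: str  # block type named in the post, e.g. "role" or "output format"
    text: str  # the prompt fragment assigned to that block


def compile_blocks(blocks):
    """Render typed blocks as XML, one element per block (hypothetical layout)."""
    lines = ["<prompt>"]
    for block in blocks:
        # Assumed rule: lower-case the kind and turn spaces/hyphens into "_".
        tag = re.sub(r"[^a-z0-9]+", "_", block.kind.lower())
        lines.append(f"  <{tag}>{escape(block.text)}</{tag}>")
    lines.append("</prompt>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(compile_blocks([
        Block("role", "You are a code reviewer."),
        Block("objective", "Find bugs in the diff."),
        Block("output format", "A bulleted list."),
    ]))
```

Escaping the block text keeps a stray `<` or `&` in the raw prompt from breaking the generated XML.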

Three interfaces: web app (React Flow canvas), browser extension (injects into ChatGPT/Claude/Gemini toolbars), and MCP server for Claude Code.

Stack: React + TypeScript + React Flow + Zustand, FastAPI + Claude API backend, Caddy.

Free, no account, open-source. Demo: https://youtu.be/hFVTnnw9wIU

FBI investigating 'suspicious' cyber activity on system holding wiretaps

The FBI is investigating a potential cybersecurity breach within a system that holds sensitive information. The investigation aims to determine the extent of the suspicious cyber activity and any potential impact on the system's security.

Show HN: Key rotation for LLM agents shouldn't require a proxy

I think in-process key management is the right abstraction for multi-key LLM setups. Not LiteLLM, not a Redis queue, not a custom load balancer.

Why? Because the failure modes are well-understood. A key gets rate-limited. You wait. You try the next one. Billing errors need a longer cooldown than rate limits. When all keys for a provider are exhausted, you fall back to another provider. This is not a distributed systems problem; it's a state machine that fits in a library.

The problem is everyone keeps solving it with infrastructure instead. You spin up a LiteLLM proxy, now you have a Python service to deploy and monitor. You reach for a Redis-backed queue, now you have a database for a problem that doesn't need one. You write a custom rotation script, now it lives in one repo and your three other agent projects don't have it.

key-carousel gives each pool a set of API key profiles with exponential-backoff cooldowns. Rate limit errors cool down at 1min → 5min → 25min → 1hr. Billing errors cool down at 5hr → 10hr → 20hr → 24hr. Fallback to OpenAI or Gemini when all Anthropic keys are exhausted. Optional file-based persistence so cooldown state survives restarts. Zero dependencies.
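The state machine described here can be sketched in a few lines. This is an illustration under stated assumptions, not key-carousel's actual API: `KeyProfile`, `KeyPool`, and `pick_with_fallback` are hypothetical names, and only the two cooldown ladders are taken from the post.

```python
from dataclasses import dataclass

# Cooldown ladders in seconds, copied from the schedule quoted in the post.
RATE_LIMIT_STEPS = [60, 5 * 60, 25 * 60, 60 * 60]
BILLING_STEPS = [5 * 3600, 10 * 3600, 20 * 3600, 24 * 3600]


@dataclass
class KeyProfile:
    key: str
    failures: int = 0          # consecutive failures; indexes into a ladder
    available_at: float = 0.0  # time (seconds) when the key is usable again

    def mark_failure(self, steps, now):
        # Climb one rung per failure and stay on the last rung once reached.
        cooldown = steps[min(self.failures, len(steps) - 1)]
        self.failures += 1
        self.available_at = now + cooldown

    def mark_success(self):
        self.failures = 0
        self.available_at = 0.0


@dataclass
class KeyPool:
    profiles: list

    def pick(self, now):
        """Return the first key that is not cooling down, or None."""
        for profile in self.profiles:
            if profile.available_at <= now:
                return profile
        return None


def pick_with_fallback(pools, now):
    """pools is an ordered list of (provider_name, KeyPool) pairs."""
    for provider, pool in pools:
        profile = pool.pick(now)
        if profile is not None:
            return provider, profile
    return None, None
```

A caller would pass `RATE_LIMIT_STEPS` or `BILLING_STEPS` to `mark_failure` depending on the error it saw, and call `mark_success` after a successful request. Taking `now` as a parameter instead of reading the clock inside the class keeps the sketch easy to test.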

Device that can extract 1k liters of clean water a day from desert air

A device developed by a 2025 Nobel Prize winner is expected to be able to extract up to 1,000 liters of clean water per day from desert air with 20% humidity or lower, providing off-grid personalized water solutions.

Show HN: Sqry – semantic code search using AST and call graphs

I built sqry, a local code search tool that works at the semantic level rather than the text level.

The motivation: ripgrep is great for finding strings, but it can't tell you "who calls this function", "what does this function call", or "find all public async functions that return Result". Those questions require understanding code structure, not just matching patterns.

sqry parses your code into an AST using tree-sitter, builds a unified call/import/dependency graph, and lets you query it:

sqry query "callers:authenticate"

sqry query "kind:function AND visibility:public AND lang:rust"

sqry graph trace-path main handle_request

sqry cycles

sqry ask "find all error handling functions"

Some things that might be interesting to HN:

- 35 language plugins via tree-sitter (C, Rust, Go, Python, TypeScript, Java, SQL, Terraform, and more)
- Cross-language edge detection: FFI linking (Rust↔C/C++), HTTP route matching (JS/TS↔Python/Java/Go)
- 33-tool MCP server so AI assistants get exact call graph data instead of relying on embedding similarity
- Arena-based graph with CSR storage; indexed queries run ~4ms warm
- Cycle detection, dead code analysis, semantic diff between git refs
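For readers who want a feel for why these questions need a graph and not a text match, here is a toy call graph that answers a "callers" query, traces a path, and detects cycles. It is a sketch only: it uses plain dictionaries where sqry uses an arena-based CSR graph, and the `CallGraph` class and its method names are hypothetical, not sqry's API.

```python
from collections import defaultdict, deque


class CallGraph:
    def __init__(self):
        self.callees = defaultdict(set)  # caller -> functions it calls
        self.callers = defaultdict(set)  # callee -> functions that call it

    def add_call(self, caller, callee):
        self.callees[caller].add(callee)
        self.callers[callee].add(caller)

    def callers_of(self, name):
        """Who calls this function?"""
        return sorted(self.callers.get(name, ()))

    def trace_path(self, src, dst):
        """Shortest call path from src to dst via breadth-first search."""
        prev = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                path = []
                while node is not None:
                    path.append(node)
                    node = prev[node]
                return path[::-1]
            for nxt in sorted(self.callees.get(node, ())):
                if nxt not in prev:
                    prev[nxt] = node
                    queue.append(nxt)
        return None

    def has_cycle(self):
        """Depth-first search; meeting an in-progress node means a cycle."""
        in_progress, done = set(), set()

        def visit(node):
            in_progress.add(node)
            for nxt in self.callees.get(node, ()):
                if nxt in in_progress:
                    return True
                if nxt not in done and visit(nxt):
                    return True
            in_progress.discard(node)
            done.add(node)
            return False

        return any(n not in done and visit(n) for n in list(self.callees))
```

The recursive cycle check is fine for a toy graph; a real index over a large codebase would want an iterative traversal.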

It's MIT-licensed and builds from source with Rust 1.90+. Fair warning: full build takes ~20 GB disk because 35 tree-sitter grammars compile from source.

Repo: https://github.com/verivusai-labs/sqry

Docs: https://sqry.dev

Happy to answer questions about the architecture, the NL translation approach, or the cross-language detection.

The Window Chrome of Our Discontent

The article discusses the evolution of web browser window design, focusing on the gradual minimization of browser chrome and its impact on user experience. It explores the tradeoffs between visual simplicity and functionality, and the need to strike a balance between these competing priorities.

When Batteries Heat Up, This Membrane "Sweats" It Out

This article describes a novel membrane that can help manage thermal runaway in lithium-ion batteries by releasing water when the battery overheats, potentially preventing catastrophic failures. The membrane is designed to provide a self-regulating thermal management system for safer and more reliable battery operation.

Show HN: Stratum - a pure JVM columnar SQL engine using the Java Vector API

Stratum is a data storage and access framework that enables efficient, scalable, and secure data management across multiple data sources. It provides a unified API for working with heterogeneous data, allowing developers to focus on building applications rather than managing complex data infrastructure.

Wild crows in Sweden help clean up cigarette butts

A study in Sweden has found that wild crows are capable of collecting and disposing of cigarette butts, demonstrating their potential to aid in environmental cleanup efforts. The crows were trained to deposit cigarette butts into a dispenser in exchange for a food reward, highlighting their ability to be used as natural cleanup crews.

Show HN: BLOBs in MariaDB's Memory Engine – No More Disk Spills for Temp Tables

Tip me, my life depends on it (2021)

The article discusses the 'Tip Me' feature on Medium, which allows readers to send monetary tips to writers they enjoy. It outlines the benefits of this feature for both writers and readers, and provides steps on how to set up and use the 'Tip Me' functionality.

Show HN: OculOS – Give AI agents control of your desktop via MCP

Hi HN,

I built OculOS because giving AI agents (like Claude Code or Cursor) control over desktop apps is still surprisingly difficult. Most current solutions rely on slow OCR/Vision or fragile pixel coordinates.

OculOS is a lightweight daemon written in Rust that reads the OS accessibility tree and exposes every button, text field, and menu item as a structured JSON API and MCP server.

Why this is different:

Semantic Control: No screenshots or coordinates. The agent interacts with actual UI elements (e.g., "Click the 'Play' button").

Rust-powered: Single binary, zero dependencies, and extremely low latency.

Universal: Supports Windows (UIA), macOS (AXUIElement), and Linux (AT-SPI2).

Local & Private: Everything runs on your machine; no UI data is sent to the cloud.

It also includes a built-in dashboard for element inspection and an automation recorder. I’m looking forward to your feedback and technical questions!

New Strides Made on Deceptively Simple 'Lonely Runner' Problem

The article discusses the 'lonely runner problem,' a mathematical puzzle that has challenged researchers for decades. Recent advancements have been made in understanding the problem, with new insights and developments that bring the solution closer to being fully resolved.

Ask HN: Why is Pi so good (and some observations)

Why is the Pi coding agent so good? Even with its minimal implementation, Pi performs better than much more complex, sophisticated, and expensive agents.

I can rule out the secret sauce being:

- system prompt (I've changed it, and performance is still awesome)
- underlying model (works great with models other harnesses suck with)
- tools, skills, etc. (all generic)

Is there a chance Pi is good because it is just... not a lot of "cognitive effort" for the model?

Show HN: Speclint – OS spec linter for AI coding agents

SpecLint is an open-source Python library that provides a flexible and extensible framework for writing and managing specification tests. It aims to improve the maintainability and reliability of software by enabling developers to define and enforce consistent testing practices across a codebase.

Qwen3.5-35B – 16GB GPU – 100T/s with 120K context AND vision enabled

What Did Ilya See?

Rust Actor Framework Playground

The article discusses the Rust Actor Framework, a library that allows for the creation of concurrent, asynchronous, and fault-tolerant applications in the Rust programming language. It highlights the framework's key features, including message passing, actor supervision, and state management, which make it a useful tool for building distributed systems and microservices.

Show HN: mTile – native macOS window tiler inspired by gTile

Built this with codex/claude because I missed gTile[1] from Ubuntu and couldn’t find a macOS tiler that felt good on a big ultrawide screen. Most mac options I tried were way too rigid for my workflow (fixed layouts, etc) or wanted a monthly subscription. gTile’s "pick your own grid sizes + keyboard flow" is exactly what I wanted and used for years.

Still rough in places and not full parity, but very usable now and I run it daily at work (forced mac life).

[1]: https://github.com/gTile/gTile

Show HN: Personalized financial literacy book for your kid

Basically, my daughter is 11, and I’ve been trying to stay engaged with her studies at school. We all know that modern schools don’t pay much attention to financial literacy. When I was in school, it was the same. I self-studied economics, and though I wasn’t sure why at the time, it ended up helping me a lot in life. These days, most kids don’t know what inflation is, what compound interest means, what a mortgage is, or how banks work.

I’m a software developer, so I started thinking about how I could help solve this problem, especially with all the AI advancements happening now. I wanted to build something fun and interactive for the modern TikTok generation. Working on this with my daughter, we figured out that the best approach would be to create a small AI-generated e-book or physical book, featuring a child’s unique picture that is relevant to the topic covered, and a one-page summary per topic. Honestly, I don’t think kids can focus on more than one page at a time these days.

I tested dozens of AI image generation tools and believe I’ve found the best one. At least, my daughter loves the product and has learned a lot of new financial concepts along the way. I think I’ve built it to the point where I can show it to the world, and I need more people to actually test it and give me their feedback and opinions, especially on image quality.

Of course, we’d eventually like to make some money from it, especially after spending about a month of my time on it and with each API call costing us a bit. But for now, you can upload your child’s photo, generate three pages for free, and give us your valuable feedback, which we’d truly appreciate.

Ask HN: Has anyone built an autonomous AI operator for their side projects?

I spent the last month building what I call an AI operator - an autonomous agent that manages my side projects end-to-end while I focus on strategy. It runs on a 30-minute heartbeat loop, publishes daily blog posts, monitors Stripe for sales, checks sites are up, and does directory submissions. It knows when to escalate to me (financial decisions, strategic pivots) and when to just handle things. The hardest part was writing the decision tree - not the AI itself but defining what it owns vs. what needs human judgment. Current setup: main agent handles orchestration, a builder sub-agent handles code/deploys, an amplifier handles content/social. Revenue is small so far ($200 from PDFs) but the system works while I sleep. Curious if others have gone down this path and what broke for you.
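The "what it owns vs. what needs human judgment" split could look something like the sketch below. It is a guess at the shape of such a decision table, not the poster's system: the task kinds, the `ROUTES` mapping, and the function names are all hypothetical. Only the escalation categories and the main/builder/amplifier roles come from the post.

```python
from dataclasses import dataclass

# From the post: financial decisions and strategic pivots go to the human.
ESCALATE_KINDS = {"financial", "strategic"}

# Assumed mapping from task kind to sub-agent; anything else stays with "main".
ROUTES = {
    "code": "builder",
    "deploy": "builder",
    "content": "amplifier",
    "social": "amplifier",
}


@dataclass
class Task:
    kind: str
    description: str


def route(task):
    """Decide who handles a task: the human, a sub-agent, or the main agent."""
    if task.kind in ESCALATE_KINDS:
        return "human"
    return ROUTES.get(task.kind, "main")


def heartbeat(tasks):
    """One pass of the loop: group pending task descriptions by handler."""
    plan = {}
    for task in tasks:
        plan.setdefault(route(task), []).append(task.description)
    return plan
```

A scheduler would call `heartbeat` every 30 minutes with whatever tasks the monitors produced, then dispatch each group to its handler.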

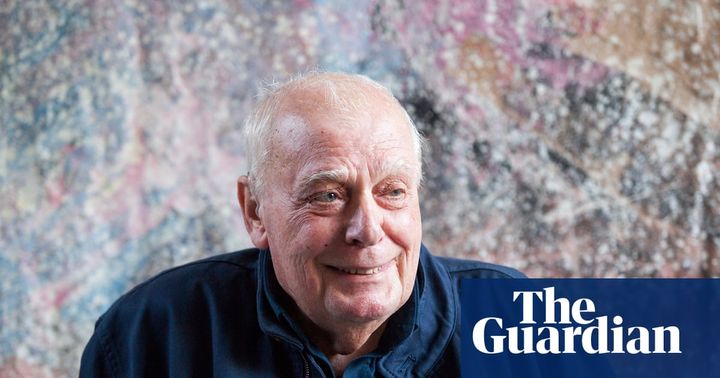

Obituary for António Lobo Antunes

The legendary Mojave Phone Booth is back (2013)

After years of neglect, the iconic Mojave phone booth has been restored and is once again operational. The article details the efforts of a group of enthusiasts who worked to preserve this remote landmark, which had become a popular destination for adventurers and tourists.

Autonomous AI Newsroom

SimpleNews.ai is an AI-powered news aggregator that curates and summarizes the latest news from top sources, providing users with a concise and personalized news experience.

People love to hate twice-a-year clock change but can't agree on how to fix it

The article discusses the ongoing debate over the practice of daylight saving time, with some people expressing frustration with the biannual clock changes and a lack of consensus on whether to abolish the practice or keep it in place.

To be a better programmer, write little proofs in your head

The article suggests that writing small proofs in one's head can help programmers improve their coding skills and problem-solving abilities. It emphasizes the value of mental exercises in developing a deeper understanding of programming concepts and algorithms.
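As one illustration of what a "little proof" can look like, here is a binary search with its loop invariant written out as assertions. The example is ours, not the article's, and `lower_bound` is a name chosen for this sketch. The mental proof is: the invariant holds before the loop, each branch preserves it, and the range shrinks on every iteration, so the loop ends with `lo == hi` pointing at the answer.

```python
def lower_bound(xs, target):
    """Return the first index i with xs[i] >= target, or len(xs) if none.

    xs must be sorted in ascending order.
    """
    lo, hi = 0, len(xs)
    while lo < hi:
        # Invariant: every element before lo is < target,
        # and every element from hi onward is >= target.
        assert all(x < target for x in xs[:lo])
        assert all(x >= target for x in xs[hi:])
        mid = (lo + hi) // 2  # lo <= mid < hi, so the range always shrinks
        if xs[mid] < target:
            lo = mid + 1      # xs[mid] and everything before it is < target
        else:
            hi = mid          # xs[mid] and everything after it is >= target
    return lo
```

The assertions are there to make the reasoning checkable and cost linear time per iteration, so they would be removed once the argument is trusted.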