Ada 2022

The article provides an overview of the Ada 2022 programming language standard, which is the latest version of the Ada language. It highlights the key features and improvements introduced in the new standard, including enhanced support for concurrency, real-time systems, and object-oriented programming.

Why it takes you and an elephant the same amount of time to poop (2017)

This article explains why animals of very different sizes, from humans to elephants, take roughly the same amount of time to defecate.

Anthropic, Please Make a New Slack

The author appeals to Anthropic, an artificial intelligence research company, to build a new alternative to Slack.

Tech employment now significantly worse than the 2008 or 2020 recessions

Show HN: Moongate – Ultima Online server emulator in .NET 10 with Lua scripting

I've been building a modern Ultima Online server emulator from scratch. It's not feature-complete (no combat, no skills yet), but the foundation is solid and I wanted to share it early.

What it does today:

- Full packet layer for the classic UO client (login, movement, items, mobiles)

- Lua scripting for item behaviors (double-click a potion, open a door; all defined in Lua, no C# recompile)

- Spatial world partitioned into sectors with delta sync (only sends packets for new sectors when crossing boundaries)

- Snapshot-based persistence with MessagePack

- Source generators for automatic DI wiring, packet handler registration, and Lua module exposure

- NativeAOT support: the server compiles to a single native binary

- Embedded HTTP admin API + React management UI

- Auto-generated doors from map statics (same algorithm as ModernUO/RunUO)
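The sector-based delta sync mentioned above can be illustrated with a minimal sketch. This is not Moongate's code (which is C#); the sector size, the view radius, and every function name below are hypothetical, chosen only to show the idea of sending data for newly visible sectors when a boundary is crossed.

```python
from typing import Set, Tuple

Sector = Tuple[int, int]

SECTOR_SIZE = 16  # hypothetical: tiles per sector side
VIEW_RADIUS = 1   # hypothetical: sectors visible in each direction


def sector_of(x: int, y: int) -> Sector:
    """Map a world tile coordinate to the sector that contains it."""
    return (x // SECTOR_SIZE, y // SECTOR_SIZE)


def visible_sectors(center: Sector) -> Set[Sector]:
    """All sectors within VIEW_RADIUS of the given sector."""
    cx, cy = center
    return {
        (cx + dx, cy + dy)
        for dx in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
        for dy in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
    }


def sectors_to_sync(old_pos: Tuple[int, int], new_pos: Tuple[int, int]) -> Set[Sector]:
    """Sectors that newly entered view after a move (the delta)."""
    old_sector = sector_of(*old_pos)
    new_sector = sector_of(*new_pos)
    if old_sector == new_sector:
        return set()  # no boundary crossed, nothing new to send
    return visible_sectors(new_sector) - visible_sectors(old_sector)
```

Moving within a sector yields an empty set, while stepping across a boundary yields only the strip of sectors that just came into view, so the amount of data sent scales with the edge of the visible area, not the whole of it.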

Tech stack: .NET 10, NativeAOT, NLua, MessagePack, DryIoc, Kestrel

What's missing: Combat, skills, weather integration, NPC AI. This is still early — the focus so far has been on getting the architecture right so adding those systems doesn't require rewiring everything.

Why not just use ModernUO/RunUO? Those are mature and battle-tested. I started this because I wanted to rethink the architecture from scratch: strict network/domain separation, event-driven game loop, no inheritance-heavy item hierarchies, and Lua for rapid iteration on game logic without recompiling.

GitHub: https://github.com/moongate-community/moongatev2

Hardening Firefox with Anthropic's Red Team

The bugs are the ones that say "using Claude from Anthropic" here: https://www.mozilla.org/en-US/security/advisories/mfsa2026-1...

https://blog.mozilla.org/en/firefox/hardening-firefox-anthro...

https://www.wsj.com/tech/ai/send-us-more-anthropics-claude-s...

Open Camera is a FOSS Camera App for Android

Open Camera is an open-source camera application for Android devices that provides advanced camera features and controls, including support for external cameras, RAW image capture, and manual exposure settings.

Apache Otava

Launch HN: Palus Finance (YC W26): Better yields on idle cash for startups, SMBs

Hi HN! We’re Sam and Michael from Palus Finance (https://palus.finance). We’re building a treasury management platform for startups and SMBs to earn higher yields with a high-yield bond portfolio.

We were funded by YC for a consumer-focused product for higher-yield savings. But when we joined YC and got our funding, we realized we needed the product for our own startup’s cash reserves, and other startups in the batch started telling us they wanted this too.

We realized that traditional startup treasury products do much the same thing: open a brokerage account, sweep your cash into a money market fund (MMF), and charge a management fee. No strategy involved. (There is actually one widely-advertised treasury product that differentiates on yield, but instead of an MMF it uses a mutual fund where your principal is at considerable risk – it had a 9% loss in 2022 that took years to recover.)

I come from a finance background, so this norm felt weird to me. The typical startup cashflow pattern is a large infusion from a raise covering 18–24 months of burn, drawn down gradually. That's a lot of capital sitting idle for a long time, where even a modest yield improvement compounds into real money.

MMFs are the lowest rung of what's available in fixed income. Yes, they’re very safe and liquid, but when you leave your whole treasury in one, you’re giving up yield to get same-day liquidity on cash you won’t touch for six months or more. Big companies have treasury teams that actively manage their holdings and invest in a range of safe assets to maximize yield. But those sophisticated bond portfolios were just never made accessible to startups. That’s what we’re building.

Our bond portfolio holds short-duration floating-rate agency mortgage-backed securities (MBS), which are an ideal, safe, high-yielding asset for long-term startup cash reserves under most circumstances.[1]

The bond portfolio is managed by Regan Capital, which runs MBSF, the largest floating-rate agency MBS ETF in the country. Right now we're using MBSF to generate yields for customers (you can see its historical returns, including dividends, here: https://totalrealreturns.com/n/USDOLLAR,MBSF). We're working with Regan to set up a dedicated account with the same strategy, which will let us reduce fees and give each startup direct ownership of the underlying securities. All assets are held with an SEC-licensed custodian.

Based on historical returns, we target 4.5–5% returns vs. roughly 3.5% from most money market funds.[2] Liquidity is typically available in 1-2 business days. We will charge a flat 0.25% annual fee on AUM, compared to the 0.15–0.60%, depending on balance, charged by other treasury providers.
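To put the quoted rates in dollar terms, here is a rough worked example. It is a sketch under loud assumptions: the raise size is hypothetical, the balance is drawn down linearly to zero, interest is simple monthly interest that is not reinvested, and the 0.25% fee is subtracted from the low end of the target range (the post does not say whether the target is quoted before or after the fee). Any fee on the money market alternative is not modelled.

```python
def interest_earned(start_balance: float, months: int, annual_rate: float) -> float:
    """Total simple monthly interest on a balance drawn down linearly to zero."""
    burn = start_balance / months
    balance = start_balance
    total = 0.0
    for _ in range(months):
        total += balance * annual_rate / 12  # interest on this month's opening balance
        balance -= burn                      # then pay the month's burn
    return total


RAISE = 5_000_000    # hypothetical raise size in dollars
RUNWAY_MONTHS = 24   # upper end of the 18-24 month range in the post
MMF_RATE = 0.035     # "roughly 3.5%" from the post
TARGET_RATE = 0.045  # low end of the 4.5-5% target from the post
FEE = 0.0025         # 0.25% annual fee on AUM from the post

mmf = interest_earned(RAISE, RUNWAY_MONTHS, MMF_RATE)
net = interest_earned(RAISE, RUNWAY_MONTHS, TARGET_RATE - FEE)
print(f"MMF: ${mmf:,.0f}  portfolio net of fee: ${net:,.0f}  difference: ${net - mmf:,.0f}")
```

Under these assumptions the difference over the runway is about $39,000. The figure scales linearly with the raise size and with the spread, and it is an illustration of the arithmetic only, not a forecast.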

We think that startup banking products themselves (Brex, Mercury, etc.) are genuinely good at what they do: payments, payroll, card management. The problem is the treasury product bundled with them, not the bank. So rather than building another neobank, we built Palus to connect to your existing bank account via Plaid. Our goal was to create the simplest possible UX for this product: two buttons and a giant number that goes up.

See here: https://www.youtube.com/watch?v=8Q_gwSqtnxM

We are live with early customers from within YC, and accepting new customers on a rolling basis; you can sign up at https://palus.finance/.

We'd love feedback from founders who've thought about idle cash management or people with a background in fixed-income and structured products. Happy to go deep in the comments.

[1] Agency MBS are pools of residential mortgages guaranteed by federal government agencies (Ginnie Mae, Fannie Mae, and Freddie Mac). It's a $9T market with the same government backing and AAA/AA+ rating as the Treasuries in a money market fund. No investor has ever lost money in agency MBS due to borrower default.

It's worth acknowledging that many people associate “mortgage-backed securities” with the 2008 financial crisis. But the assets that blew up in 2008 were private-label MBS, bundles of risky subprime mortgages without federal guarantees. Agency MBS holders suffered no credit losses during the crisis, and post-2008 underwriting standards became even stricter. If anything, 2008 was evidence for the safety of agency MBS, not against it.

The agency guarantee eliminates credit risk. Our short-duration, floating-rate strategy addresses the other main risk: price risk. Fixed-rate bonds lose value when rates rise, but floating-rate bonds reset their coupon based on the SOFR benchmark, protecting against interest rate movements.
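The difference in price risk can be made concrete with the standard first-order duration approximation, where the fractional price change is roughly minus the duration times the change in yield. The two durations below are hypothetical values picked for illustration, not figures from the portfolio described here; a floater's effective duration is taken to be roughly the time to its next coupon reset, assumed quarterly.

```python
def approx_price_change(duration_years: float, rate_change: float) -> float:
    """First-order estimate of fractional price change: -duration * change in yield."""
    return -duration_years * rate_change


FIXED_DURATION = 5.0     # hypothetical fixed-rate bond duration, in years
FLOATER_DURATION = 0.25  # hypothetical: about one quarter until the coupon resets
RATE_RISE = 0.01         # a one percentage point rise in rates

fixed_move = approx_price_change(FIXED_DURATION, RATE_RISE)
floater_move = approx_price_change(FLOATER_DURATION, RATE_RISE)
print(f"fixed-rate: {fixed_move:.2%}  floating-rate: {floater_move:.2%}")
```

With these inputs the fixed-rate bond loses about 5% of its price while the floater loses about 0.25%. The approximation ignores convexity and any change in the spread over SOFR.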

[2] This comes from the historical spread between MMFs and floating-rate agency MBS; MMFs typically pay very close to SOFR, while the MBS pay SOFR + 1 to 1.5%. This means that if the Federal Reserve changes interest rates and SOFR moves, both asset types will move by about the same amount, and that 1-1.5% premium will remain.

This post is for educational purposes only and does not constitute financial, investment, or legal advice. Past performance does not guarantee future results. Yields and spreads referenced are approximate and based on historical data.

Payphone Go

The article explores the revival of payphones in the digital age, highlighting how a startup called Payphone Go is repurposing old payphones as Wi-Fi hotspots and interactive kiosks to provide internet access and local information to communities.

CT Scans of Health Wearables

This article explores the potential of health wearables to revolutionize personal healthcare, discussing their ability to continuously monitor various health metrics and provide valuable insights to users and medical professionals.

Triplet Superconductor

Astra: An open-source observatory control software

Astra is open-source software for controlling observatories.

TSA leaves passenger needing surgery after illegally forcing her through scanner

The article reports on a passenger who was forced by the TSA to go through a body scanner, despite having a spinal cord implant that made this unsafe. This resulted in the passenger suffering injuries that required surgery.

Multifactor (YC F25) Is Hiring an Engineering Lead

Multifactor, a YC-backed startup, is seeking an experienced Engineering Lead to join their team and help build a cutting-edge software platform that aims to revolutionize how businesses manage their financial operations.

Entomologists use a particle accelerator to image ants at scale

This article discusses AntScan, a project in which entomologists use a particle accelerator to produce high-resolution 3D scans of ants at scale.

LibreSprite – open-source pixel art editor

LibreSprite is an open-source, cross-platform sprite editor and pixel art tool that provides a wide range of features for creating and manipulating 2D graphics, with a focus on pixel art and animation.

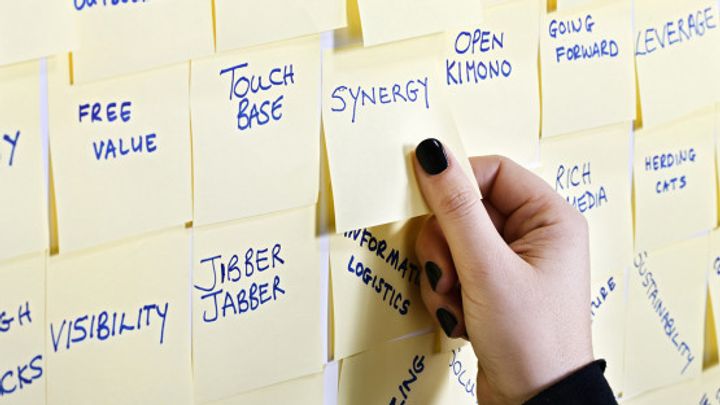

Workers who love ‘synergizing paradigms’ might be bad at their jobs

The article discusses the potential drawbacks of using excessive business jargon and buzzwords in the workplace. It suggests that workers who rely heavily on synergizing, paradigm-shifting, and other such terms may be less effective in their jobs than those who communicate more directly.

Analytic Fog Rendering with Volumetric Primitives (2025)

The article discusses a novel approach to rendering analytic fog using volumetric primitives, which allows for realistic and efficient depiction of atmospheric effects in real-time 3D graphics applications.

Good Bad ISPs

This article provides an overview of internet service providers (ISPs) that are considered 'good' or 'bad' for Tor relays, based on community feedback. It highlights factors like bandwidth limits, censorship, and ISP policies that can impact the performance and reliability of Tor relays.

A tool that removes censorship from open-weight LLMs

Global warming has accelerated significantly

The article presents evidence that global warming has accelerated significantly in recent years, with global temperatures rising at a faster rate than previously observed. It discusses the potential causes and implications of this rapid climate change.

Show HN: Claude-replay – A video-like player for Claude Code sessions

I got tired of sharing AI demos with terminal screenshots or screen recordings.

Claude Code already stores full session transcripts locally as JSONL files. Those logs contain everything: prompts, tool calls, thinking blocks, and timestamps.

I built a small CLI tool that converts those logs into an interactive HTML replay.

You can step through the session, jump through the timeline, expand tool calls, and inspect the full conversation.

The output is a single self-contained HTML file — no dependencies. You can email it, host it anywhere, embed it in a blog post, and it works on mobile.
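A minimal sketch of the general idea, turning JSONL records into one dependency-free HTML page, might look like the following. This is not claude-replay's implementation, and the field names `role`, `timestamp`, and `text` are hypothetical; the real Claude Code transcript schema is not described in the post.

```python
import html
import json
from typing import Dict, List


def load_events(jsonl_text: str) -> List[Dict]:
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]


def render_replay(events: List[Dict]) -> str:
    """Render events as a single HTML page with one collapsible entry per event."""
    items = []
    for event in events:
        # "role", "timestamp", and "text" are hypothetical field names.
        role = html.escape(str(event.get("role", "unknown")))
        stamp = html.escape(str(event.get("timestamp", "")))
        body = html.escape(str(event.get("text", "")))
        items.append(
            f"<details><summary>{stamp} {role}</summary><pre>{body}</pre></details>"
        )
    return (
        "<!doctype html><html><head><meta charset='utf-8'>"
        "<title>Session replay</title></head><body>"
        + "\n".join(items)
        + "</body></html>"
    )
```

Escaping every field before embedding it matters here, because transcripts routinely contain angle brackets and markup that would otherwise be interpreted by the browser.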

Repo: https://github.com/es617/claude-replay

Example replay: https://es617.github.io/assets/demos/peripheral-uart-demo.ht...

Show HN: A trainable, modular electronic nose for industrial use

Hi HN,

I’m part of the team building Sniphi.

Sniphi is a modular digital nose that uses gas sensors and machine-learning models to convert volatile organic compound (VOC) data into a machine-readable signal that can be integrated into existing QA, monitoring, or automation systems. The system is currently in an R&D phase, but already exists as working hardware and software and is being tested in real environments.

The project grew out of earlier collaborations with university researchers on gas sensors and odor classification. What we kept running into was a gap between promising lab results and systems that could actually be deployed, integrated, and maintained in real production environments.

One of our core goals was to avoid building a single-purpose device. The same hardware and software stack can be trained for different use cases by changing the training data and models, rather than the physical setup. In that sense, we think of it as a “universal” electronic nose: one platform, multiple smell-based tasks.

Some design principles we optimized for:

- Composable architecture: sensor ingestion, ML inference, and analytics are decoupled and exposed via APIs/events

- Deployment-first thinking: designed for rollout in factories and warehouses, not just controlled lab setups

- Cloud-backed operations: model management, monitoring, updates run on Azure, which makes it easier to integrate with existing industrial IT setups

- Trainable across use cases: the same platform can be retrained for different classification or monitoring tasks without redesigning the hardware
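The "same platform, different training data" principle can be illustrated with a toy classifier. The post does not describe Sniphi's actual models, so the nearest-centroid method below is a stand-in, and the labels and sensor readings are invented for the example.

```python
import math
from typing import Dict, List, Sequence


def train(samples: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Compute one mean sensor vector (centroid) per label."""
    centroids = {}
    for label, vectors in samples.items():
        n = len(vectors)
        centroids[label] = [sum(column) / n for column in zip(*vectors)]
    return centroids


def classify(centroids: Dict[str, List[float]], reading: Sequence[float]) -> str:
    """Return the label whose centroid is closest to the reading."""
    return min(centroids, key=lambda label: math.dist(centroids[label], reading))


# Hypothetical readings from three gas-sensor channels.
coffee_model = train({
    "arabica": [[0.9, 0.2, 0.1], [0.8, 0.3, 0.1]],
    "robusta": [[0.3, 0.7, 0.4], [0.2, 0.8, 0.5]],
})
grain_model = train({
    "clean": [[0.1, 0.1, 0.1], [0.2, 0.1, 0.1]],
    "contaminated": [[0.1, 0.2, 0.9], [0.2, 0.1, 0.8]],
})
```

The same `train` and `classify` code serves both tasks; only the labelled data changes, which mirrors the retraining idea in the list above. A production system would need calibration, drift handling, and a far more capable model.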

One public demo we show is classifying different coffee aromas, but that’s just a convenient example. In practice, we’re exploring use cases such as:

- Quality control and process monitoring

- Early detection of contamination or spoilage

- Continuous monitoring in large storage environments (e.g. detecting parasite-related grain contamination in warehouses)

Because this is a hardware system, there’s no simple way to try it over the internet. To make it concrete, we’ve shared:

- A short end-to-end demo video showing the system in action (YouTube)

- A technical overview of the architecture and deployment model: https://sniphi.com/

At this stage, we’re especially interested in feedback and conversations with people who:

- Have deployed physical sensors at scale

- Have run into problems that smell data might help with

- Are curious about piloting or testing something like this in practice

We’re not fundraising here. We’re mainly trying to learn where this kind of sensing is genuinely useful and where it isn’t.

Happy to answer technical questions.

Paul Brainerd, founder of Aldus PageMaker, has died

The article discusses the life and legacy of Paul Brainerd, the founder of Aldus Corporation and the creator of the PageMaker desktop publishing software, which revolutionized the printing industry in the 1980s and 1990s.

We might all be AI engineers now

The article discusses the growing importance of AI in various industries and how more people are becoming AI engineers, even those without a traditional computer science background. It explores the increasing accessibility and widespread adoption of AI tools and platforms, allowing non-experts to participate in AI-related tasks and projects.

TypeScript 6.0 RC

The article announces the release of TypeScript 6.0 Release Candidate, highlighting new features like improved support for JavaScript modules, strict tuple types, and better error messages for common configuration issues.

Show HN: Reconstruct any image using primitive shapes, runs in-browser via WASM

I built a browser-based port of fogleman/primitive — a Go CLI tool that approximates images using primitive shapes (triangles, ellipses, beziers, etc.) via a hill-climbing algorithm. The original tool requires building from source and running from the terminal, which isn't exactly accessible. I compiled the core logic to WebAssembly so anyone can drop an image and watch it get reconstructed shape by shape, entirely client-side with no server involved.
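For readers unfamiliar with the technique, here is a much-simplified sketch of hill climbing over shapes. It is not the code of fogleman/primitive or of this port: it works on a small grayscale grid, uses only axis-aligned rectangles, and every name in it is hypothetical. Each shape starts random, is mutated repeatedly, and a mutation is kept only when it lowers the error against the target.

```python
import random
from typing import List, Tuple

Image = List[List[float]]
Rect = Tuple[int, int, int, int, float]  # x0, y0, x1, y1 (inclusive), gray value


def draw(canvas: Image, rect: Rect) -> Image:
    """Return a copy of canvas with the rectangle painted on it."""
    x0, y0, x1, y1, value = rect
    out = [row[:] for row in canvas]
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            out[y][x] = value
    return out


def error(a: Image, b: Image) -> float:
    """Sum of squared pixel differences."""
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def random_rect(w: int, h: int, rng: random.Random) -> Rect:
    x0, x1 = sorted((rng.randrange(w), rng.randrange(w)))
    y0, y1 = sorted((rng.randrange(h), rng.randrange(h)))
    return (x0, y0, x1, y1, rng.random())


def mutate(rect: Rect, w: int, h: int, rng: random.Random) -> Rect:
    """Nudge one corner or the gray value, clamped to valid bounds."""
    x0, y0, x1, y1, value = rect
    choice = rng.randrange(3)
    if choice == 0:
        x0 = min(max(x0 + rng.choice((-1, 1)), 0), x1)
        y0 = min(max(y0 + rng.choice((-1, 1)), 0), y1)
    elif choice == 1:
        x1 = max(min(x1 + rng.choice((-1, 1)), w - 1), x0)
        y1 = max(min(y1 + rng.choice((-1, 1)), h - 1), y0)
    else:
        value = min(max(value + rng.uniform(-0.1, 0.1), 0.0), 1.0)
    return (x0, y0, x1, y1, value)


def add_shape(target: Image, canvas: Image, rng: random.Random, steps: int = 200) -> Image:
    """Hill-climb one rectangle; keep it only if it lowers the overall error."""
    h, w = len(target), len(target[0])
    best = random_rect(w, h, rng)
    best_err = error(target, draw(canvas, best))
    for _ in range(steps):
        candidate = mutate(best, w, h, rng)
        candidate_err = error(target, draw(canvas, candidate))
        if candidate_err < best_err:  # accept only improvements
            best, best_err = candidate, candidate_err
    if best_err < error(target, canvas):
        return draw(canvas, best)
    return canvas


def approximate(target: Image, shapes: int = 20, seed: int = 0) -> Image:
    """Build up an approximation of target one rectangle at a time."""
    rng = random.Random(seed)
    h, w = len(target), len(target[0])
    canvas = [[0.5] * w for _ in range(h)]
    for _ in range(shapes):
        canvas = add_shape(target, canvas, rng)
    return canvas
```

Because a shape is committed only when it reduces the error, the approximation never gets worse as shapes are added, which is what makes watching the reconstruction shape by shape satisfying.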

Demo: https://primitive-playground.taiseiue.jp/

Source: https://github.com/taiseiue/primitive-playground

Curious if anyone has ideas for shapes or features worth adding.

Supertoast tables

This article introduces SuperToast, a new open-source library for creating interactive toast notifications in web applications. It highlights SuperToast's features, such as customizable styles, actions, and dismissal options, as well as its integration with popular frameworks like React and Vue.js.

It took four years until 2011’s iOS 5 gave everyone an emoji keyboard

The article recounts how it took four years, until iOS 5 in 2011, for the emoji keyboard to become available to everyone.