Querying 160 GB of Parquet Files with DuckDB in 15 Minutes

zekrom, Wednesday, December 24, 2025

Summary

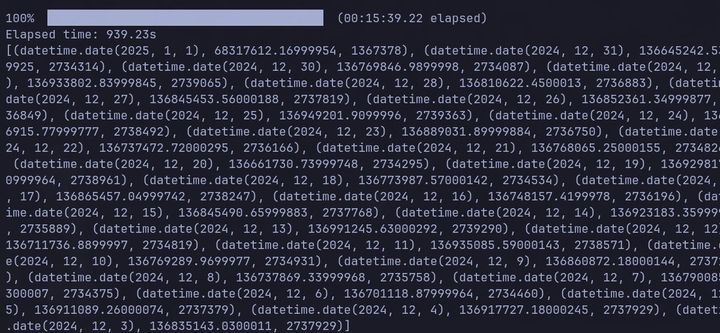

The article discusses the challenges of querying large volumes of Parquet data and presents an approach using DuckDB to process and analyze 160 GB of data stored in Parquet format, showing how to tune performance and reduce processing time.


datamethods.substack.com