Building Reliable LLM Batch Processing Systems

andreeamiclaus Wednesday, January 28, 2026

Summary

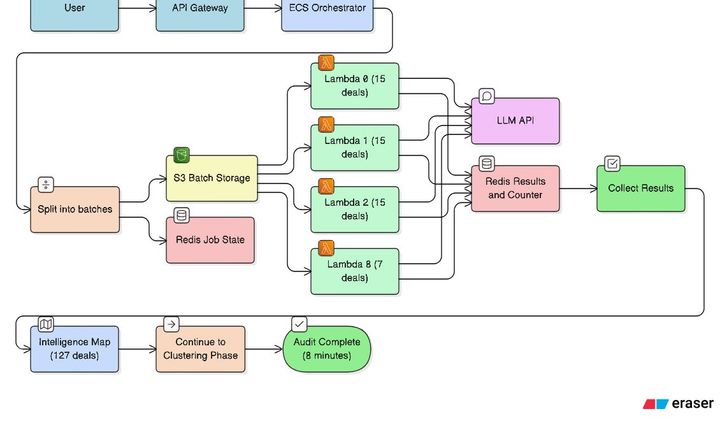

This article explores the challenges of, and best practices for, building reliable batch-processing pipelines for large language models (LLMs). It covers three key considerations for robust, scalable LLM-powered applications: system design, error handling, and performance optimization.
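The summary names error handling as a key consideration. The article's own implementation is not reproduced here. What follows is a minimal sketch of one common pattern, not the author's method. It assumes a hypothetical `call_model` function that sends one prompt to an LLM and a hypothetical `TransientError` exception for retryable failures such as rate limits or timeouts. Transient failures are retried with exponential backoff. Permanent failures are recorded without retrying, so one bad item never aborts the whole batch.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Tuple


class TransientError(Exception):
    """Hypothetical error type for retryable failures (e.g. rate limits, timeouts)."""


@dataclass
class BatchResult:
    successes: List[Tuple[int, str]] = field(default_factory=list)  # (item index, model output)
    failures: List[Tuple[int, str]] = field(default_factory=list)   # (item index, error description)


def process_batch(
    prompts: Iterable[str],
    call_model: Callable[[str], str],  # hypothetical: wraps whatever LLM client you use
    max_retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,  # injectable so tests need not wait
) -> BatchResult:
    result = BatchResult()
    for i, prompt in enumerate(prompts):
        for attempt in range(max_retries + 1):
            try:
                result.successes.append((i, call_model(prompt)))
                break
            except TransientError as exc:
                if attempt == max_retries:
                    # Retries exhausted: record the failure and continue with the next item
                    result.failures.append((i, f"gave up after {attempt + 1} attempts: {exc}"))
                else:
                    # Exponential backoff: base_delay, 2*base_delay, 4*base_delay, ...
                    sleep(base_delay * 2 ** attempt)
            except Exception as exc:
                # Non-transient error: retrying will not help, so record it and move on
                result.failures.append((i, f"permanent error: {exc}"))
                break
    return result
```

Keeping successes and failures in one result object lets a pipeline re-queue only the failed indices on a later run instead of reprocessing the whole batch. Injecting `sleep` is a design choice that keeps the backoff logic testable without real delays.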

Source: theneuralmaze.substack.com