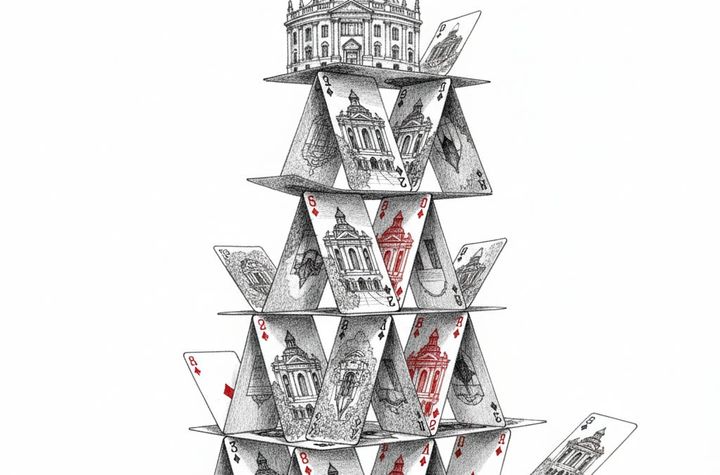

Attention is all you need to bankrupt a university

HR01 Sunday, February 22, 2026

Summary

The article discusses how the Transformer model, a neural network architecture built on attention mechanisms, has come to dominate natural language processing. It credits the architecture with breakthroughs across many applications, but also raises concerns that it may worsen information overload and contribute to shrinking attention spans.

hollisrobbinsanecdotal.substack.com